The functionality resides in the cvaux library. To use it in your application, place #include "cvaux.h" in your source files and link the application against the cvaux library.

The object detector described below was initially proposed by Paul Viola [Viola01] and improved by Rainer Lienhart [Lienhart02]. First, a classifier (namely a cascade of boosted classifiers working with Haar-like features) is trained with a few hundred sample views of a particular object (e.g., a face or a car), called positive examples, that are scaled to the same size (say, 20x20), and with negative examples: arbitrary images of the same size.

After a classifier is trained, it can be applied to a region of interest (of the same size as used during the training) in an input image. The classifier outputs "1" if the region is likely to show the object (e.g., a face or a car), and "0" otherwise. To search for the object in the whole image, one can move the search window across the image and check every location using the classifier. The classifier is designed so that it can be easily "resized" in order to find objects of interest at different sizes, which is more efficient than resizing the image itself. So, to find an object of unknown size in the image, the scan procedure should be done several times at different scales.
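The multi-scale scan described above can be sketched in plain C. This is a minimal illustration under assumptions, not the cvaux implementation: the classifier is a hypothetical callback type (`window_classifier_fn`), the window is enlarged by a `scale_factor` between passes (named after the cvHaarDetectObjects argument, but used here in a hypothetical function), and the fixed `step` between window positions is an assumption. The toy `contains_target` classifier exists only to make the sketch testable.

```c
/* Hypothetical classifier callback: returns 1 if the win_w x win_h window
   at (x, y) is likely to show the object, 0 otherwise. */
typedef int (*window_classifier_fn)(int x, int y, int win_w, int win_h, void* user);

/* Scans an img_w x img_h image several times. The window starts at the
   training size base_w x base_h and is enlarged by scale_factor (> 1) on
   every pass, i.e. the classifier is "resized" instead of the image.
   Positive windows are stored as (x, y, w, h) quadruples in hits (at most
   max_hits of them); the return value is the total number of positives. */
int scan_multiscale(int img_w, int img_h, int base_w, int base_h,
                    double scale_factor, int step,
                    window_classifier_fn classify, void* user,
                    int* hits, int max_hits)
{
    int found = 0;
    double scale;

    if (scale_factor <= 1.0 || step <= 0)
        return 0; /* the loops below would never terminate */

    for (scale = 1.0; ; scale *= scale_factor) {
        int win_w = (int)(base_w * scale);
        int win_h = (int)(base_h * scale);
        int x, y;

        if (win_w > img_w || win_h > img_h)
            break; /* the window no longer fits into the image */

        /* move the search window across the image */
        for (y = 0; y + win_h <= img_h; y += step)
            for (x = 0; x + win_w <= img_w; x += step) {
                if (!classify(x, y, win_w, win_h, user))
                    continue;
                if (found < max_hits) {
                    hits[4 * found + 0] = x;
                    hits[4 * found + 1] = y;
                    hits[4 * found + 2] = win_w;
                    hits[4 * found + 3] = win_h;
                }
                found++;
            }
    }
    return found;
}

/* Toy classifier for demonstration only: accepts any window that fully
   contains the target rectangle {x, y, w, h} passed through user. */
static int contains_target(int x, int y, int win_w, int win_h, void* user)
{
    const int* t = (const int*)user;
    return x <= t[0] && y <= t[1] &&
           x + win_w >= t[0] + t[2] && y + win_h >= t[1] + t[3];
}
```

For example, scanning a 48x48 image with a 24x24 base window, scale_factor 2.0 and step 24 evaluates four 24x24 windows on the first pass and one 48x48 window on the second; the third pass is skipped because a 96x96 window does not fit.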

The word "cascade" in the classifier name means that the resultant classifier consists of several simpler classifiers (stages) that are applied sequentially to a region of interest until, at some stage, the candidate is rejected or all the stages are passed. The word "boosted" means that the classifiers at every stage of the cascade are complex themselves, and they are built out of basic classifiers using one of four different boosting techniques (weighted voting). Currently Discrete Adaboost, Real Adaboost, Gentle Adaboost and Logitboost are supported.
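The cascade logic can be illustrated with a short sketch. It assumes hypothetical types `Stump` and `Stage` (not the cvaux structures), uses decision stumps as the basic classifiers, and assumes the feature responses for one window have already been computed into an array. Each stage sums the outputs of its stumps (weighted voting), and the window is rejected as soon as a stage sum does not exceed the stage threshold, so later stages are never evaluated for it.

```c
/* A decision stump: a decision tree with a single node and 2 leaves. */
typedef struct {
    int feature;      /* index of the feature response it looks at */
    double threshold; /* branch threshold */
    double left_val;  /* output if the response is <= threshold */
    double right_val; /* output otherwise */
} Stump;

/* One boosted stage: its stumps vote, and the stage accepts the window
   if the sum of their outputs is greater than the stage threshold. */
typedef struct {
    int count;           /* number of stumps in the stage */
    const Stump* stumps; /* array of stumps */
    double threshold;    /* stage threshold */
} Stage;

/* Applies the stages in order to one window, described by its precomputed
   feature responses. Returns the number of stages passed, so a return value
   equal to num_stages means the window passed the whole cascade. */
int run_cascade(const Stage* stages, int num_stages, const double* features)
{
    int s, i;

    for (s = 0; s < num_stages; s++) {
        double sum = 0.0;
        for (i = 0; i < stages[s].count; i++) {
            const Stump* st = &stages[s].stumps[i];
            sum += features[st->feature] <= st->threshold ? st->left_val : st->right_val;
        }
        if (sum <= stages[s].threshold)
            return s; /* rejected here: later stages are never evaluated */
    }
    return num_stages;
}
```

Early rejection is what makes the cascade cheap: most windows in a typical image fail one of the first, small stages, so the larger later stages run only on a few promising candidates.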

The basic classifiers are decision-tree classifiers with at least

2 leaves. Haar-like features are the input to the basic classifers, and

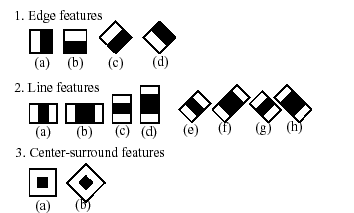

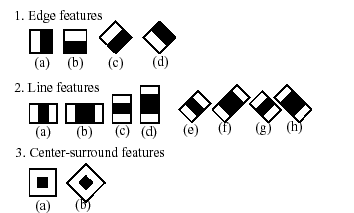

are calculated as described below. The current algorithm uses the following

Haar-like features:

The feature used in a particular classifier is specified by its shape (1a, 2b, etc.), its position within the region of interest and its scale (this scale is not the same as the scale used at the detection stage, although the two scales are multiplied). For example, in the case of the third line feature (2c), the response is calculated as the difference between the sum of image pixels under the rectangle covering the whole feature (including the two white stripes and the black stripe in the middle) and the sum of the image pixels under the black stripe multiplied by 3, in order to compensate for the differences in the size of the areas. The sums of pixel values over rectangular regions are calculated rapidly using integral images (see below and the cvIntegral description).
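The following sketch shows how an integral image turns each rectangle sum into four lookups, and how a three-stripe line feature such as 2c could be computed from it. It is an illustration under assumptions, not cvIntegral itself: the function names are hypothetical, the input is a plain 8-bit grayscale buffer, and the three stripes are assumed to lie side by side horizontally.

```c
/* Builds an integral image of a w x h 8-bit image: sum has (w+1) x (h+1)
   entries, and sum[y*(w+1) + x] is the sum of all pixels above and to the
   left of (x, y), exclusive. Row 0 and column 0 are zero. */
void integral_image(const unsigned char* img, int w, int h, long* sum)
{
    int x, y;
    for (x = 0; x <= w; x++)
        sum[x] = 0;
    for (y = 1; y <= h; y++) {
        long row = 0; /* running sum of the current image row */
        sum[y * (w + 1)] = 0;
        for (x = 1; x <= w; x++) {
            row += img[(y - 1) * w + (x - 1)];
            sum[y * (w + 1) + x] = sum[(y - 1) * (w + 1) + x] + row;
        }
    }
}

/* Sum of the pixels in [x, x+rw) x [y, y+rh) using four lookups. */
long rect_sum(const long* sum, int w, int x, int y, int rw, int rh)
{
    int s = w + 1; /* row stride of the integral image */
    return sum[(y + rh) * s + (x + rw)] - sum[y * s + (x + rw)]
         - sum[(y + rh) * s + x] + sum[y * s + x];
}

/* Three-stripe line feature (in the spirit of 2c): the sum under the whole
   feature minus 3 times the sum under the middle (black) stripe, so that a
   uniform region gives a response of 0. */
long line_feature(const long* sum, int w, int x, int y, int stripe_w, int h)
{
    long whole = rect_sum(sum, w, x, y, 3 * stripe_w, h);
    long black = rect_sum(sum, w, x + stripe_w, y, stripe_w, h);
    return whole - 3 * black;
}
```

Once the integral image is built (one pass over the image), every rectangle sum costs the same four lookups regardless of the rectangle size, which is why scaling the features instead of the image is cheap.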

To see the object detector at work, have a look at the HaarFaceDetect demo.

The following reference is for the detection part only. There is a separate application called haartraining that can train a cascade of boosted classifiers from a set of samples. See opencv/apps/haartraining for details.

Boosted Haar classifier structures

#define CV_HAAR_FEATURE_MAX 3

/* a Haar feature consists of 2-3 rectangles with appropriate weights */
typedef struct CvHaarFeature
{
    int tilted; /* 0 means up-right feature, 1 means 45-degree rotated feature */

    /* 2-3 rectangles with weights of opposite signs and
       with absolute values inversely proportional to the areas of the rectangles.
       If rect[2].weight != 0, then
       the feature consists of 3 rectangles, otherwise it consists of 2 */
    struct
    {
        CvRect r;
        float weight;
    } rect[CV_HAAR_FEATURE_MAX];
}
CvHaarFeature;

/* a single tree classifier (stump in the simplest case) that returns the response for the feature
   at the particular image location (i.e. pixel sum over subrectangles of the window) and gives out
   a value depending on the response */
typedef struct CvHaarClassifier
{
    int count; /* number of nodes in the decision tree */
    CvHaarFeature* haarFeature;

    /* these are "parallel" arrays. Every index i
       corresponds to a node of the decision tree (root has 0-th index).
       left[i] - index of the left child (or negated index if the left child is a leaf)
       right[i] - index of the right child (or negated index if the right child is a leaf)
       threshold[i] - branch threshold. If the feature response is <= threshold, the left branch
                      is chosen, otherwise the right branch is chosen.
       alpha[i] - output value corresponding to the leaf. */
    float* threshold; /* array of decision thresholds */
    int* left;        /* array of left-branch indices */
    int* right;       /* array of right-branch indices */
    float* alpha;     /* array of output values */
}
CvHaarClassifier;

/* a boosted battery of classifiers (= stage classifier):
   the stage classifier returns 1
   if the sum of the classifiers' responses
   is greater than threshold and 0 otherwise */
typedef struct CvHaarStageClassifier
{
    int count;                    /* number of classifiers in the battery */
    float threshold;              /* threshold for the boosted classifier */
    CvHaarClassifier* classifier; /* array of classifiers */
}
CvHaarStageClassifier;

/* cascade of stage classifiers */
typedef struct CvHaarClassifierCascade
{
    int count;                              /* number of stages */
    CvSize origWindowSize;                  /* original object size (the cascade is trained for) */
    CvHaarStageClassifier* stageClassifier; /* array of stage classifiers */
}
CvHaarClassifierCascade;
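A tree classifier stored in the "parallel" arrays of CvHaarClassifier can be evaluated with a short loop. This sketch takes plain arrays instead of the structure, assumes the feature response of every node was computed beforehand (`responses`, a hypothetical input), and reads the negated-index convention as: leaf k is stored as -k, so a child index of 0 means leaf 0 (the root is never a child). That reading of the convention is an assumption.

```c
/* Evaluates one decision tree given as parallel arrays.
   threshold[i], left[i], right[i]: node data as in CvHaarClassifier;
   alpha[k]: output of leaf k; responses[i]: feature response at node i.
   Child indices > 0 are internal nodes; a leaf k is encoded as -k. */
float eval_tree(const float* threshold, const int* left, const int* right,
                const float* alpha, const float* responses)
{
    int idx = 0; /* start at the root */
    do {
        /* response <= threshold selects the left branch */
        idx = responses[idx] <= threshold[idx] ? left[idx] : right[idx];
    } while (idx > 0);
    return alpha[-idx]; /* idx <= 0 encodes a leaf */
}
```

In the simplest case, a stump, there is a single node with left[0] = 0 (leaf 0) and right[0] = -1 (leaf 1), and the classifier returns alpha[0] or alpha[1].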

All the structures are used for representing a cascade of boosted Haar classifiers. The cascade has the following hierarchical structure:

Cascade:
    Stage1:
        Classifier11:
            Feature11
        Classifier12:
            Feature12
        ...
    Stage2:
        Classifier21:
            Feature21
        ...
    ...

The whole hierarchy can be constructed manually, or loaded from a file or from the embedded database using the function cvLoadHaarClassifierCascade.

Loads a trained cascade classifier from file or the classifier database embedded in OpenCV

CvHaarClassifierCascade*
cvLoadHaarClassifierCascade( const char* directory="<default_face_cascade>",
                             CvSize origWindowSize=cvSize(24,24));

The function cvLoadHaarClassifierCascade loads a trained cascade of Haar classifiers from a file or from the classifier database embedded in OpenCV. Cascades can be trained using the haartraining application (see opencv/apps/haartraining for details).

Releases Haar classifier cascade

void cvReleaseHaarClassifierCascade( CvHaarClassifierCascade** cascade );

The function cvReleaseHaarClassifierCascade deallocates the cascade that has been created manually or by cvLoadHaarClassifierCascade.

Converts boosted classifier cascade to internal representation

/* hidden (optimized) representation of Haar classifier cascade */
typedef struct CvHidHaarClassifierCascade CvHidHaarClassifierCascade;

CvHidHaarClassifierCascade*
cvCreateHidHaarClassifierCascade( CvHaarClassifierCascade* cascade,
                                  const CvArr* sumImage=0,
                                  const CvArr* sqSumImage=0,
                                  const CvArr* tiltedSumImage=0,
                                  double scale=1 );

The function cvCreateHidHaarClassifierCascade converts a pre-loaded cascade to a faster internal representation. This step must be done before the actual processing. The integral image pointers may be NULL; in this case, the images should be assigned later by cvSetImagesForHaarClassifierCascade.

Releases hidden classifier cascade structure

void cvReleaseHidHaarClassifierCascade( CvHidHaarClassifierCascade** cascade );

The function cvReleaseHidHaarClassifierCascade deallocates the structure that is the internal ("hidden") representation of a Haar classifier cascade.

Detects objects in the image

typedef struct CvAvgComp
{
    CvRect rect;   /* bounding rectangle for the face (average rectangle of a group) */
    int neighbors; /* number of neighbor rectangles in the group */
}
CvAvgComp;

CvSeq* cvHaarDetectObjects( const IplImage* img, CvHidHaarClassifierCascade* cascade,
                            CvMemStorage* storage, double scale_factor=1.1,
                            int min_neighbors=3, int flags=0 );

min_neighbors: groups with a smaller number of rectangles than min_neighbors-1 are rejected. If min_neighbors is 0, the function does not do any grouping at all and returns all the detected candidate rectangles, which may be useful if the user wants to apply a customized grouping procedure.

flags: mode of operation; it may be set to CV_HAAR_DO_CANNY_PRUNING. If it is set, the function uses the Canny edge detector to reject some image regions that contain too few or too many edges and thus cannot contain the searched object. The particular threshold values are tuned for face detection, and in this case the pruning speeds up the processing.

The function cvHaarDetectObjects finds rectangular regions in the given image that are likely to contain objects the cascade has been trained for, and returns those regions as a sequence of rectangles. The function scans the image several times at different scales (see cvSetImagesForHaarClassifierCascade). Each time, it considers overlapping regions in the image and applies the classifiers to the regions using cvRunHaarClassifierCascade. It may also apply some heuristics to reduce the number of analyzed regions, such as Canny pruning. After it has processed the image and collected the candidate rectangles (regions that passed the classifier cascade), it groups them and returns a sequence of average rectangles for each large enough group. The default parameters (scale_factor=1.1, min_neighbors=3, flags=0) are tuned for accurate yet slow face detection. For faster face detection on real video images, better settings are (scale_factor=1.2, min_neighbors=2, flags=CV_HAAR_DO_CANNY_PRUNING).
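The grouping step can be sketched as follows. The neighbor test (all four edges within `eps` times the smaller width) and the rule that a group is kept when it has at least `min_neighbors` rectangles are assumptions made for illustration; cvHaarDetectObjects may use a different criterion and boundary. `Rect` and `Group` are hypothetical types, with `Group` mirroring the fields of CvAvgComp.

```c
#include <stdlib.h>

typedef struct { int x, y, width, height; } Rect;   /* hypothetical */
typedef struct { Rect rect; int neighbors; } Group; /* mirrors CvAvgComp */

/* Assumed neighbor test: all four edges lie within eps * (smaller width). */
static int similar_rects(const Rect* a, const Rect* b, double eps)
{
    double d = eps * (a->width < b->width ? a->width : b->width);
    return abs(a->x - b->x) <= d &&
           abs(a->y - b->y) <= d &&
           abs(a->x + a->width - b->x - b->width) <= d &&
           abs(a->y + a->height - b->y - b->height) <= d;
}

/* Union-find root lookup with path halving. */
static int find_root(int* parent, int i)
{
    while (parent[i] != i) {
        parent[i] = parent[parent[i]];
        i = parent[i];
    }
    return i;
}

/* Groups the n candidates, averages each group and keeps the groups with
   at least min_neighbors rectangles. With min_neighbors == 0, no grouping
   is done and every candidate is returned as is. out must hold n entries.
   Returns the number of entries written, or -1 on allocation failure. */
int group_rects(const Rect* cand, int n, int min_neighbors, double eps, Group* out)
{
    int i, j, count = 0;
    int* parent;

    if (n <= 0)
        return 0;
    if (min_neighbors == 0) {
        for (i = 0; i < n; i++) {
            out[i].rect = cand[i];
            out[i].neighbors = 1;
        }
        return n;
    }

    parent = malloc((size_t)n * sizeof *parent);
    if (parent == NULL)
        return -1;
    for (i = 0; i < n; i++)
        parent[i] = i;

    /* merge every pair of similar candidates into one group */
    for (i = 0; i < n; i++)
        for (j = i + 1; j < n; j++)
            if (similar_rects(&cand[i], &cand[j], eps)) {
                int ri = find_root(parent, i);
                int rj = find_root(parent, j);
                parent[ri] = rj;
            }

    /* visit each group once, through its root, and average its members */
    for (i = 0; i < n; i++) {
        long sx = 0, sy = 0, sw = 0, sh = 0;
        int members = 0;

        if (find_root(parent, i) != i)
            continue;
        for (j = 0; j < n; j++)
            if (find_root(parent, j) == i) {
                sx += cand[j].x;
                sy += cand[j].y;
                sw += cand[j].width;
                sh += cand[j].height;
                members++;
            }
        if (members >= min_neighbors) {
            out[count].rect.x = (int)(sx / members);
            out[count].rect.y = (int)(sy / members);
            out[count].rect.width = (int)(sw / members);
            out[count].rect.height = (int)(sh / members);
            out[count].neighbors = members;
            count++;
        }
    }
    free(parent);
    return count;
}
```

With three nearly identical 24x24 candidates around (11, 10) and one isolated candidate, min_neighbors=2 yields a single averaged rectangle with 3 neighbors, while min_neighbors=0 returns all four candidates unchanged.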

#include "cv.h"
#include "cvaux.h"
#include "highgui.h"

CvHidHaarClassifierCascade* new_face_detector(void)
{
    CvHaarClassifierCascade* cascade = cvLoadHaarClassifierCascade("<default_face_cascade>", cvSize(24,24));
    /* images are assigned inside cvHaarDetectObjects, so pass NULL pointers here */
    CvHidHaarClassifierCascade* hid_cascade = cvCreateHidHaarClassifierCascade( cascade, 0, 0, 0, 1 );
    /* the original cascade is not needed anymore */
    cvReleaseHaarClassifierCascade( &cascade );
    return hid_cascade;
}

void detect_and_draw_faces( IplImage* image,
                            CvHidHaarClassifierCascade* cascade,
                            int do_pyramids )
{
    IplImage* small_image = image;
    CvMemStorage* storage = cvCreateMemStorage(0);
    CvSeq* faces;
    int i, scale = 1;

    /* if the flag is specified, down-scale the input image to get a
       performance boost without (hopefully) losing quality */
    if( do_pyramids )
    {
        small_image = cvCreateImage( cvSize(image->width/2,image->height/2), IPL_DEPTH_8U, 3 );
        cvPyrDown( image, small_image, CV_GAUSSIAN_5x5 );
        scale = 2;
    }

    /* use the fastest variant */
    faces = cvHaarDetectObjects( small_image, cascade, storage, 1.2, 2, CV_HAAR_DO_CANNY_PRUNING );

    /* draw all the rectangles */
    for( i = 0; i < faces->total; i++ )
    {
        /* extract the rectangles only (rect is the first member of CvAvgComp) */
        CvRect face_rect = *(CvRect*)cvGetSeqElem( faces, i, 0 );
        /* map the rectangle back to the resolution of the original image */
        cvRectangle( image, cvPoint(face_rect.x*scale,face_rect.y*scale),
                     cvPoint((face_rect.x+face_rect.width)*scale,
                             (face_rect.y+face_rect.height)*scale),
                     CV_RGB(255,0,0), 3 );
    }

    if( small_image != image )
        cvReleaseImage( &small_image );
    cvReleaseMemStorage( &storage );
}

/* takes image filename from the command line */
int main( int argc, char** argv )
{
    IplImage* image;
    if( argc==2 && (image = cvLoadImage( argv[1], 1 )) != 0 )
    {
        CvHidHaarClassifierCascade* cascade = new_face_detector();
        detect_and_draw_faces( image, cascade, 1 );
        cvNamedWindow( "test", 0 );
        cvShowImage( "test", image );
        cvWaitKey(0);
        cvReleaseHidHaarClassifierCascade( &cascade );
        cvReleaseImage( &image );
    }
    return 0;
}

Assigns images to the hidden cascade

void cvSetImagesForHaarClassifierCascade( CvHidHaarClassifierCascade* cascade,
                                          const CvArr* sumImage, const CvArr* sqSumImage,
                                          const CvArr* tiltedImage, double scale );

scale: window scale for the cascade. If scale=1, the original window size is used (objects of that size are searched), which is the same size as specified in cvLoadHaarClassifierCascade (24x24 in the case of "<default_face_cascade>"). If scale=2, a two times larger window is used (48x48 in the case of the default face cascade). While this speeds up the search about four times, faces smaller than 48x48 cannot be detected.

The function cvSetImagesForHaarClassifierCascade assigns images and/or the window scale to the hidden classifier cascade. If image pointers are NULL, the previously set images are used further (i.e., NULLs mean "do not change images"). The scale parameter has no such "protection" value, but the previous value can be retrieved by the cvGetHaarClassifierCascadeScale function and reused. The function is used to prepare the cascade for detecting objects of a particular size in a particular image. It is called internally by cvHaarDetectObjects, but it can be called by the user if there is a need to use the lower-level function cvRunHaarClassifierCascade.
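The relationship between scale and window size, and the number of passes a multi-scale search needs, can be worked out with a few lines of arithmetic. The sketch assumes, for illustration only, that a search starts at scale 1 and multiplies the scale by a scale_factor until the window no longer fits in the image; cvHaarDetectObjects may step through scales differently, and the function names are hypothetical.

```c
/* Window side for a given scale, rounded to the nearest pixel:
   a 24-pixel window at scale 2 becomes 48 pixels. */
int window_side(int orig_side, double scale)
{
    return (int)(orig_side * scale + 0.5);
}

/* Number of scales (passes over the image) when the scale starts at 1
   and is multiplied by scale_factor (> 1) while the window still fits. */
int count_scales(int img_w, int img_h, int orig_w, int orig_h, double scale_factor)
{
    int passes = 0;
    double scale;

    if (scale_factor <= 1.0)
        return -1; /* the scale would never grow */

    for (scale = 1.0;
         window_side(orig_w, scale) <= img_w && window_side(orig_h, scale) <= img_h;
         scale *= scale_factor)
        passes++;
    return passes;
}
```

Under these assumptions, a 320x240 image searched with the 24x24 default face window needs 25 passes with scale_factor=1.1 but only 13 with scale_factor=1.2, which is one reason the larger factor is faster.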

Runs cascade of boosted classifier at given image location

int cvRunHaarClassifierCascade( CvHidHaarClassifierCascade* cascade,
                                CvPoint pt, int startStage=0 );

The function cvRunHaarClassifierCascade runs the Haar classifier cascade at a single image location. Before using this function, the integral images and the appropriate scale (and thus the window size) should be set using cvSetImagesForHaarClassifierCascade. The function returns a positive value if the analyzed rectangle passed all the classifier stages (it is a candidate), and zero or a negative value otherwise.

Retrieves the current scale of cascade of classifiers

double cvGetHaarClassifierCascadeScale( CvHidHaarClassifierCascade* cascade );

The function cvGetHaarClassifierCascadeScale retrieves the current scale factor for the search window of the Haar classifier cascade. The scale can be changed by cvSetImagesForHaarClassifierCascade by passing NULL image pointers and the new scale value.

Retrieves the current search window size of cascade of classifiers

CvSize cvGetHaarClassifierCascadeWindowSize( CvHidHaarClassifierCascade* cascade );

The function cvGetHaarClassifierCascadeWindowSize retrieves the current search window size for the Haar classifier cascade. The window size can be changed implicitly by setting the appropriate scale.

Calculates disparity for stereo-pair

void cvFindStereoCorrespondence( const CvArr* leftImage, const CvArr* rightImage,
                                 int mode, CvArr* depthImage,
                                 int maxDisparity,
                                 double param1, double param2, double param3,
                                 double param4, double param5 );

The function cvFindStereoCorrespondence calculates a disparity map for two rectified grayscale images.

Example. Calculating disparity for a pair of 8-bit color images

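/*---------------------------------------------------------------------------------*/
/* load both views as 3-channel color images */
IplImage* srcLeft = cvLoadImage("left.jpg",1);
IplImage* srcRight = cvLoadImage("right.jpg",1);
/* the disparity is computed on 8-bit single-channel (grayscale) images */
IplImage* leftImage = cvCreateImage(cvGetSize(srcLeft), IPL_DEPTH_8U, 1);
IplImage* rightImage = cvCreateImage(cvGetSize(srcRight), IPL_DEPTH_8U, 1);
IplImage* depthImage = cvCreateImage(cvGetSize(srcRight), IPL_DEPTH_8U, 1);
cvCvtColor(srcLeft, leftImage, CV_BGR2GRAY);
cvCvtColor(srcRight, rightImage, CV_BGR2GRAY);
/* Birchfield method, maxDisparity = 50, param1..param5 = 15, 3, 6, 8, 15 */
cvFindStereoCorrespondence( leftImage, rightImage, CV_DISPARITY_BIRCHFIELD, depthImage, 50, 15, 3, 6, 8, 15 );
/* release the images once the disparity map is no longer needed */
cvReleaseImage(&srcLeft); cvReleaseImage(&srcRight);
cvReleaseImage(&leftImage); cvReleaseImage(&rightImage); cvReleaseImage(&depthImage);
/*---------------------------------------------------------------------------------*/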

And here is the example stereo pair that can be used to test the example

This section discusses functions for tracking objects in 3d space using a stereo camera. Besides the C API, there is a DirectShow 3dTracker filter and the wrapper application 3dTracker. Here you may find a description of how to test the filter on sample data.

Simultaneously determines position and orientation of multiple cameras

CvBool cv3dTrackerCalibrateCameras(int num_cameras,
                                   const Cv3dTrackerCameraIntrinsics camera_intrinsics[],
                                   CvSize checkerboard_size,
                                   IplImage *samples[],
                                   Cv3dTrackerCameraInfo camera_info[]);

The function cv3dTrackerCalibrateCameras searches for a checkerboard of the specified size in each of the images. For each image in which it finds the checkerboard, it fills in the corresponding slot in camera_info with the position and orientation of the camera relative to the checkerboard and sets the valid flag. If it finds the checkerboard in all the images, it returns true; otherwise, it returns false.

This function does not change the members of the camera_info array that correspond to images in which the checkerboard was not found. This allows you to calibrate each camera independently, instead of simultaneously.

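To accomplish this, do the following: clear all the valid flags before calling this function the first time, call it with each new set of images, and check all the valid flags after each call. When all the valid flags are set, calibration is complete.

Alternatively, to calibrate all the cameras simultaneously, clear all the valid flags and use the return value to decide when calibration is complete.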
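The independent calibration procedure above amounts to a simple loop. Because cv3dTrackerCalibrateCameras needs real cameras and images, this sketch replaces it with a hypothetical callback (`calibrate_fn`) that follows the documented contract: it sets the valid flag of each camera that saw the checkerboard and leaves the other entries untouched. `CameraInfo` is a hypothetical stand-in that models only the valid flag, and `simulated_calibrate` is a fake used only for demonstration.

```c
/* Hypothetical stand-in for Cv3dTrackerCameraInfo: only the valid flag is
   modeled; the real slot also receives the camera position and orientation. */
typedef struct {
    int valid;
} CameraInfo;

/* Hypothetical callback standing in for one call of the calibration
   function on a freshly captured set of images. */
typedef void (*calibrate_fn)(int num_cameras, CameraInfo* info, void* user);

void clear_valid_flags(CameraInfo* info, int num_cameras)
{
    int i;
    for (i = 0; i < num_cameras; i++)
        info[i].valid = 0;
}

int all_cameras_valid(const CameraInfo* info, int num_cameras)
{
    int i;
    for (i = 0; i < num_cameras; i++)
        if (!info[i].valid)
            return 0;
    return 1;
}

/* Calls calibrate() with new image sets until every camera is calibrated.
   Returns the number of calls used, or -1 if max_attempts was reached. */
int calibrate_independently(int num_cameras, CameraInfo* info,
                            calibrate_fn calibrate, void* user, int max_attempts)
{
    int attempt;

    clear_valid_flags(info, num_cameras);          /* before the first call */
    for (attempt = 1; attempt <= max_attempts; attempt++) {
        calibrate(num_cameras, info, user);        /* one new set of images */
        if (all_cameras_valid(info, num_cameras))  /* check after each call */
            return attempt;
    }
    return -1; /* some cameras never saw the checkerboard */
}

/* Simulation for demonstration only: on each call, one more camera
   "finds" the checkerboard. user points to an int call counter. */
static void simulated_calibrate(int num_cameras, CameraInfo* info, void* user)
{
    int* calls = (int*)user;
    if (*calls < num_cameras)
        info[*calls].valid = 1;
    (*calls)++;
}
```

Because cameras that did not see the checkerboard keep their previous entries, the flags accumulate across calls, which is what lets each camera be calibrated from a different set of images.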

Determines 3d location of tracked objects

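int cv3dTrackerLocateObjects(int num_cameras,
                             int num_objects,
                             const Cv3dTrackerCameraInfo camera_info[],
                             const Cv3dTracker2dTrackedObject tracking_info[],
                             Cv3dTrackerTrackedObject tracked_objects[]);

num_objects: the maximum number of objects found by any camera (also the maximum number of objects returned in tracked_objects).

tracking_info: the 2d tracking information from each camera. Although it is declared as a one-dimensional array, it is laid out as the two-dimensional array const Cv3dTracker2dTrackedObject tracking_info[num_cameras][num_objects]. The id field of any unused slots must be -1. Ids need not be ordered or consecutive.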

The function cv3dTrackerLocateObjects determines the 3d position of tracked objects based on the 2d tracking information from multiple cameras and the camera position and orientation information computed by cv3dTrackerCalibrateCameras. It locates any objects with the same id that are tracked by more than one camera. It fills in the tracked_objects array and returns the number of objects located. The id fields of any unused slots in tracked_objects are set to -1.
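The tracking_info layout and the id rules can be illustrated with a small sketch. `TrackedObject2d` is a hypothetical stand-in for Cv3dTracker2dTrackedObject (only the id field and the -1 convention come from the description above; the x and y fields are assumptions), and the helper names are hypothetical. It shows how the one-dimensional array is addressed as [camera][slot], how unused slots are marked, and how to count the ids seen by more than one camera, which are the objects that can be located.

```c
/* Hypothetical stand-in for Cv3dTracker2dTrackedObject. */
typedef struct {
    int id;     /* object id, or -1 for an unused slot */
    float x, y; /* assumed: 2d position in the camera image */
} TrackedObject2d;

/* Returns slot [camera][slot] of the table passed as a flat array:
   one row of num_objects slots per camera. */
TrackedObject2d* tracking_slot(TrackedObject2d* info, int num_objects, int camera, int slot)
{
    return &info[camera * num_objects + slot];
}

/* Marks every slot of the num_cameras x num_objects table as unused. */
void clear_tracking_info(TrackedObject2d* info, int num_cameras, int num_objects)
{
    int i;
    for (i = 0; i < num_cameras * num_objects; i++) {
        info[i].id = -1;
        info[i].x = info[i].y = 0.0f;
    }
}

static int camera_has_id(const TrackedObject2d* info, int num_objects, int camera, int id)
{
    int s;
    for (s = 0; s < num_objects; s++)
        if (info[camera * num_objects + s].id == id)
            return 1;
    return 0;
}

/* Counts distinct ids seen by more than one camera. Ids are matched by
   value, not by slot position, since they need not be ordered or
   consecutive. Assumes an id appears at most once per camera. */
int count_locatable(const TrackedObject2d* info, int num_cameras, int num_objects)
{
    int c, s, k, count = 0;

    for (c = 0; c < num_cameras; c++)
        for (s = 0; s < num_objects; s++) {
            int id = info[c * num_objects + s].id;
            int earlier = 0, later = 0;

            if (id == -1)
                continue; /* unused slot */
            for (k = 0; k < c; k++)
                earlier |= camera_has_id(info, num_objects, k, id);
            if (earlier)
                continue; /* this id was already considered */
            for (k = c + 1; k < num_cameras; k++)
                later |= camera_has_id(info, num_objects, k, id);
            count += later;
        }
    return count;
}
```

For example, if camera 0 reports ids 7 and 2 and camera 1 reports ids 5 and 7 in different slots, only id 7 is seen by both cameras, so one object can be located.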